AI Can’t Fix Bad Learning

Why pedagogy and good learning design still come first, and why faster isn't always better.

I’ve followed Dr. Philippa Hardman’s work for years, and every time I engage with it, I find it both refreshing and deeply grounded.

As one of the leading voices in learning design, Philippa has been able to cut through the noise and focus on what truly matters: designing learning experiences that actually work.

In an era where AI promises speed and scale, Philippa is making a different argument: faster isn’t always better. As the creator of Epiphany AI (Figma for learning designers), Philippa is focused on closing the gap between what great learning design should look like and what’s actually being delivered.

While many AI tools optimize for the average, she believes the future belongs to those who can leverage AI without compromising on expertise or quality. Philippa wants learning designers to be more ambitious, using AI to achieve what wasn’t possible before.

In this conversation, we explore why pedagogy must lead technology, how the return on expertise is only increasing in an AI-driven world, and why building faster doesn’t always mean building better.

(The interview has been edited and condensed for clarity.)

How did you get into learning design?

I didn’t set out to be a learning designer—far from it. I actually ask this same question to the participants in my learning design bootcamps, and it’s always fascinating how varied the paths are.

My own path started in academia. I was focused on digital humanities at the University of Sheffield, exploring how technology could expand access to education. I went on to do a PhD there, followed by a postdoc at Cambridge.

It was during that time I realized something that struck me deeply. Despite all the research we had on how humans learn, very little of it was making its way into classrooms or corporate training rooms.

That gap is what drew me into learning design. I believed—and still do—that if we could make learning science more accessible and translate it into practice, we could fundamentally improve the way we design learning experiences.

Right now, we tend to obsess over the bricks—the individual pieces—without thinking enough about the engineering of the entire house.

Corporate L&D is estimated at over $300 billion a year, yet I’d argue that 90% of it yields little real return from a learning perspective. I can’t think of another industry where that kind of inefficiency would be tolerated.

I stepped into this space with a clear hypothesis: we can do better, and learning science is the key.

What do you think are the biggest problems in learning design right now?

First, at an industry level, learning design is still an emerging discipline. There’s no formal route into the field, no universally recognized certifications, and the tooling is fragmented. In many ways, it reminds me of where UX design was 10 or 15 years ago—growing rapidly, but without the structure or professionalization it needs.

The second big challenge is one of capacity. There’s simply more to do than can reasonably be done.

Then there’s the question of quality. Too often, the focus is on the quality of the content itself rather than on the quality of learning outcomes. Part of this comes down to a lack of understanding about how to apply learning science in practice. But it’s also driven by KPIs that prioritize speed over effectiveness. We’re measured on how quickly we can roll something out, not on whether it actually works.

And finally, we’re constrained by technology. Most learning management systems are still operating within a paradigm that’s barely changed in 30 years. The tools we have often aren’t fit for the kind of learning experiences we’re trying to design today.

How does the rise of Generative AI impact both the design of learning experiences and the learning experience itself?

In my view, the impact plays out in two distinct ways: speed and quality.

On the speed front, we’re undoubtedly seeing progress. What was once slow and expensive is now faster and cheaper. Tools like Synthesia are widely used in corporate L&D to accelerate content creation and development. But much of that acceleration has happened in the production phase—less so in the actual design of learning experiences.

That’s where I see a real concern. Many people are being given free rein to use generic AI tools, but with little to no guidance on how to use them well. And because we still lack a shared understanding of what “great” learning design looks like, the outcomes are often mediocre.

I recently ran a controlled test comparing an experienced human learning designer working with and without ChatGPT. What we found was telling: for experts, AI made the process faster without sacrificing quality. But for non-experts, the quality actually declined. Generative AI tends to give you the average answer—not the optimal one.

In essence, GenAI is helping us make things cheaper and faster, but it’s not necessarily making them better. It hasn’t fundamentally transformed the learning experience for learners—at least, not in a positive way yet.

What are the key principles of designing effective learning journeys in the age of AI?

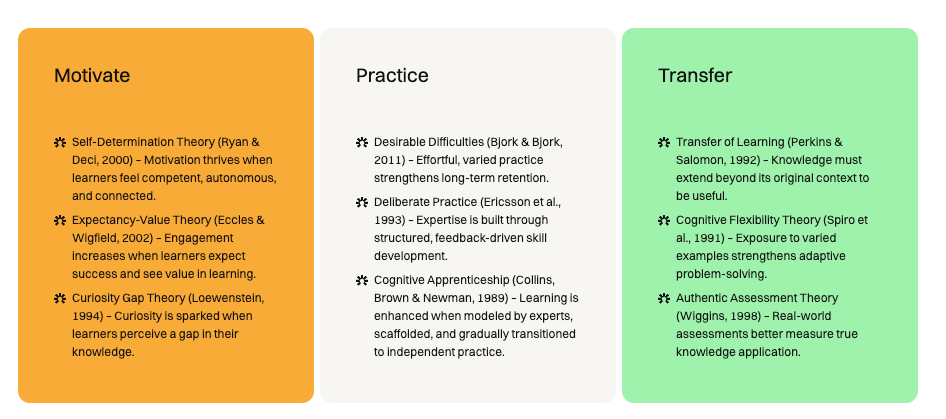

When we look at the latest research in learning science, three core principles consistently stand out—whether we’re designing with or without AI.

First, we need to design for intrinsic motivation. Learners have to see a clear answer to the question, “What’s in it for me?” Motivation isn’t a nice-to-have—it’s foundational.

We need to take this seriously and draw from key frameworks like Self-Determination Theory, which not only fosters motivation but also encourages curiosity. Without that internal drive, learning simply doesn’t stick.

Second, we need to design for practice. More specifically, for active learning through doing. We’ve known this for a long time—constructionist approaches, deliberate practice, spaced repetition—they all outperform passive forms of learning. But in practice, many learning journeys still rely too heavily on content consumption rather than applied learning.

And third, we need to design for transfer. The ultimate goal of learning is the ability to apply knowledge and skills in different, often unpredictable contexts. Yet we rarely design for effective transfer. That requires authentic, contextualized assessment and feedback: learning experiences that mirror real-world application and provide meaningful, timely feedback.

If I had to distill it down: Motivate. Practice. Transfer. These are the pillars of any effective learning journey.

How should we rethink learner motivation and assessment in AI-driven learning environments?

GenAI gives us an unprecedented opportunity to understand what motivates our learners. If we design learning experiences that align with what learners want and what they struggle with, we can drive much deeper engagement. But it starts with data.

In this vein, one of the more exciting developments is the potential for AI to simulate learner behavior. There was a recent study on GPT-4o that showed, with the right data, it can reliably predict how learners will respond. That means we can now use AI to act as a kind of proxy for our target learner—giving us valuable clues about how they might engage with the experience. But it also pushes us to take learner data more seriously and use it more thoughtfully.

When it comes to assessment, what we’re mostly seeing is more of the same, just happening faster. But speed doesn’t equal progress.

The research is clear: good assessment needs to be authentic and as close to real life as possible. AI can help us design those kinds of assessments rapidly. The challenge is knowing what “good” really looks like—and that’s where human expertise becomes critical.

As I said before, AI, by default, aims for the average. In learning design, the current average isn’t good enough. The return on expertise is only going up. If we want to create high-impact learning in an AI-driven world, skilled human judgment matters more than ever.

You’re working on Epiphany AI, which you describe as a ‘pedagogy first’ AI co-pilot for instructional design. First, what is a pedagogy-first approach? Second, what problem is Epiphany solving, and what traction are you seeing today?

With Epiphany we are thinking deeply about the learning design layer of AI. Most tools in the space are focused on building faster. We’re focused on building better—on helping people create more optimal learning experiences.

Where many AI tools are like Canva for learning design—targeted at non-experts—we see Epiphany as more like Figma. It’s designed to augment the capabilities of professionals.

We’re building for people who do this work for a living and want to do it not only more efficiently, but also more effectively.

We think of Epiphany as a co-pilot, rather than a tool that automates the work entirely. It surfaces the key information designers need to make better decisions. It provides the research to justify those decisions—helping people become genuinely evidence-based practitioners. The output is a storyboard that enables fast, seamless collaboration.

Coming back to the first part of your question, a pedagogy-first approach means the mode of delivery is critical. Epiphany doesn’t just design generically for online or face-to-face; it makes opinionated recommendations. It will tell you if it disagrees with your approach, even though you always have the final say.

It’s still a work in progress. My co-founder, Gianluca, and I have been working on it for two years, with extensive feedback from corporate L&D. We released it just a few weeks ago, and we already have more than 1,000 enterprises on the waitlist.

I hope you enjoyed the latest edition of Nafez’s Notes.

I’m constantly refining my personal thesis on innovation in learning and education. Please do reach out if you have any thoughts on learning - especially as it relates to my favorite problems.

If you are building a startup in the learning space and taking a pedagogy-first approach - I’d love to hear from you. I’m especially keen to talk to people building in the assessment space.

Finally, if you are new here you might also enjoy some of my most popular pieces:

The Gameboy instead of the Metaverse of Education - An attempt to emphasize the importance of modifying the learning process itself as opposed to the technology we are using.

Using First Principles to Push Past the Hype in Edtech - A call to ground all attempts at innovating in edtech in first principles and move beyond the hype.

We knew it was broken. Now we might just have to fix it - An optimistic view on how generative AI will transform education by creating “lower floors and higher ceilings”.

10,000 thanks for sharing this interview. Dr. Phil’s genius – particularly her perspective about using AI to support instructional design that changes outcomes for learners – is a bright light for me as I am developing a course to teach seniors how to write and produce short personal stories using BookCreator so they can earn a new seat at the table in their friendships and families in these chaotic times. I’m working as a volunteer instructor at the Osher Lifelong Learning Institute after a long career in all kinds of settings. It seems to me vital that my peers and I take more of a teaching role as education institutions dissolve here in the US, and I’m committed to helping my elder students use story to teach from their experiences. I’m a lifelong Dewey devotee.

Thank you thank you Nafez! Such a lovely read. This line has stuck with me - "AI, by default, aims for the average."

I've subscribed to follow your thoughts :)