Building the Public Infrastructure for AI in Education

Making sure AI for learning is transparent, auditable and community driven

It’s not yet possible to know the true impact of AI on learning. As with most technologies, we tend to overestimate the short-term “transformation” and underestimate the long-term consequences of minor choices and the trajectories we create.

Most of the excitement around AI in education is currently around the growing suite of private chatbots and other tools waiting to “disrupt” learning. There’s been less emphasis on creating more community-driven and open solutions - the public infrastructure needed to create a large enough tide to raise all boats.

In that light, I sat down with Yusuf Ahmed, co-founder of Playlab AI, to learn more about their journey down this path.

Yusuf has always championed using “first principles” to push past the hype in edtech, and in this chat he brings that lens to ensuring AI is on a trajectory to serve as many learners as possible.

(The interview has been edited & condensed for clarity)

How did you first get involved in education & learning?

I first became involved in education when I found myself working directly with the President of the American University in Cairo (AUC) during the Egyptian revolution.

This was a significant period, marked by the rise of MOOCs, and we explored whether we could harness that momentum to shift towards more project-based learning, using technology as a catalyst.

Unfortunately, despite the overwhelming interest, traditional teaching methods prevailed. This experience left me questioning what it truly takes to change education systems and institutions.

My perspective shifted when I had the opportunity to join the founding team of the African Leadership University (ALU) to lead the development of their degree-granting programs. This was a unique chance to envision what a 21st-century university could look like if built from the ground up.

One of the pivotal moments at ALU was when we were designing the computer science course. We had set out to use parts of CS50 from edX.

Interestingly, we noticed that the course started with Scratch, which initially puzzled us: why would a university class start with a children’s programming language? We soon realized that this approach enabled students to focus on logic before syntax and to discover the power of computing immediately.

As a result, students from different backgrounds persevered through the course and its challenges, developing a resilient mindset.

This realization led me to discover constructionism, which eventually pushed me to do a degree with Mitch Resnick and the Lifelong Kindergarten group at MIT.

Why did you start Playlab AI?

Our goal is to democratize who gets to shape how AI is used in education so that this technology benefits all, not just a select few.

Before Playlab, I led product development for Teach For America (TFA), one of the largest nonprofits in the U.S. It was during this period that ChatGPT was launched.

I began experimenting with AI, but noticed that many educators whom I deeply respect were hesitant to engage with it. This led my co-founder Ian Serlin and me to create a sandbox environment where they could play, tinker, and explore AI's possibilities and limitations without any pressure.

This space was crucial in allowing educators to think critically and creatively about AI, and it became our primary motivation.

We were quickly surprised, however, when teachers pushed past our sandbox environment and began deploying actual projects that impacted teaching and learning.

What struck us most was not just the AI tools they built, but how they put those tools to work to transform teaching and learning.

They built both tiny, niche products that market incentives wouldn’t encourage venture-backed companies to build, and their own unique spins on tools already on the market, which had much deeper impact once they were contextualized to meet their needs and goals.

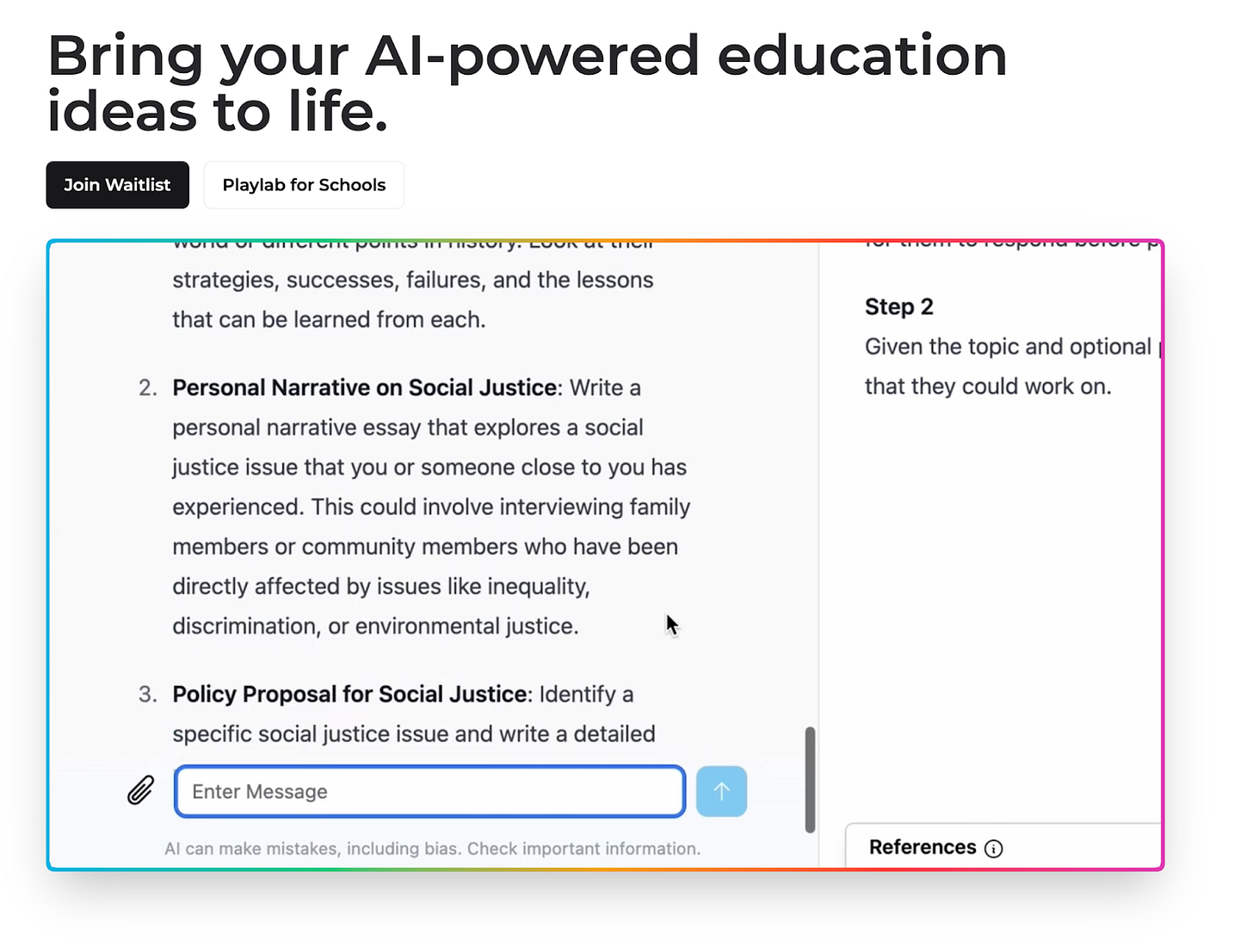

Today we operate on two levels. First, Playlab serves as a sandbox where people can explore and learn about AI by creating it. Second, it functions as a creator tool, enabling users to develop AI tools that can be directly applied in the classroom.

Why did you go down the non-profit route instead of launching a startup?

As educators began to build within this sandbox, we were genuinely impressed by what they were creating. The idea of turning this into a startup crossed our minds, but we were concerned that taking the venture capital route would pressure us to scale rapidly - reinforcing existing educational infrastructures without fully understanding AI's implications.

We still aren’t convinced that prioritizing speed is the right approach at this nascent stage of AI. Being a nonprofit allows us to prioritize thoughtful exploration over rapid expansion.

We are at a pivotal moment where creating educational tools is becoming more accessible, and our mission is to ensure that this creativity is both responsible and innovative.

Where are you today with Playlab AI?

We’re in private beta, testing our technology with a select group of partners.

Our community has grown to 17,000 creators - including educators, entrepreneurs, and a small number of students in places with appropriate guardrails. Collectively, they have published over 18,000 AI-powered tools and experiences that have been used by over 200,000 people - from teachers and students in New York City Public Schools to educators in Western Uganda’s largest Refugee Camp, Coburwas.

While we want to open up Playlab so that anyone can sign up, we’ve seen that even well-intentioned people can create harm with AI, and we’re not interested in encouraging the proliferation of tools that simply reinscribe practices that don’t actually push forward teaching and learning.

We’ll be opening up more soon as we scale our professional learning partnerships to better support people in building both responsibly and thoughtfully.

You’ve described your work at Playlab as “building public infrastructure for education in the age of AI.” Can you elaborate on that vision?

When I describe our work as “building public infrastructure for education,” I’m emphasizing our commitment to making AI in education transparent (so everyone understands how it works), auditable (so its impacts can be scrutinized), and community-driven (so educators and learners can shape the technology according to their needs, rather than having those needs dictated to them).

We could have easily created Playlab as a closed platform—similar to something like Teachers Pay Teachers, Facebook, or Amazon. But we chose a different path.

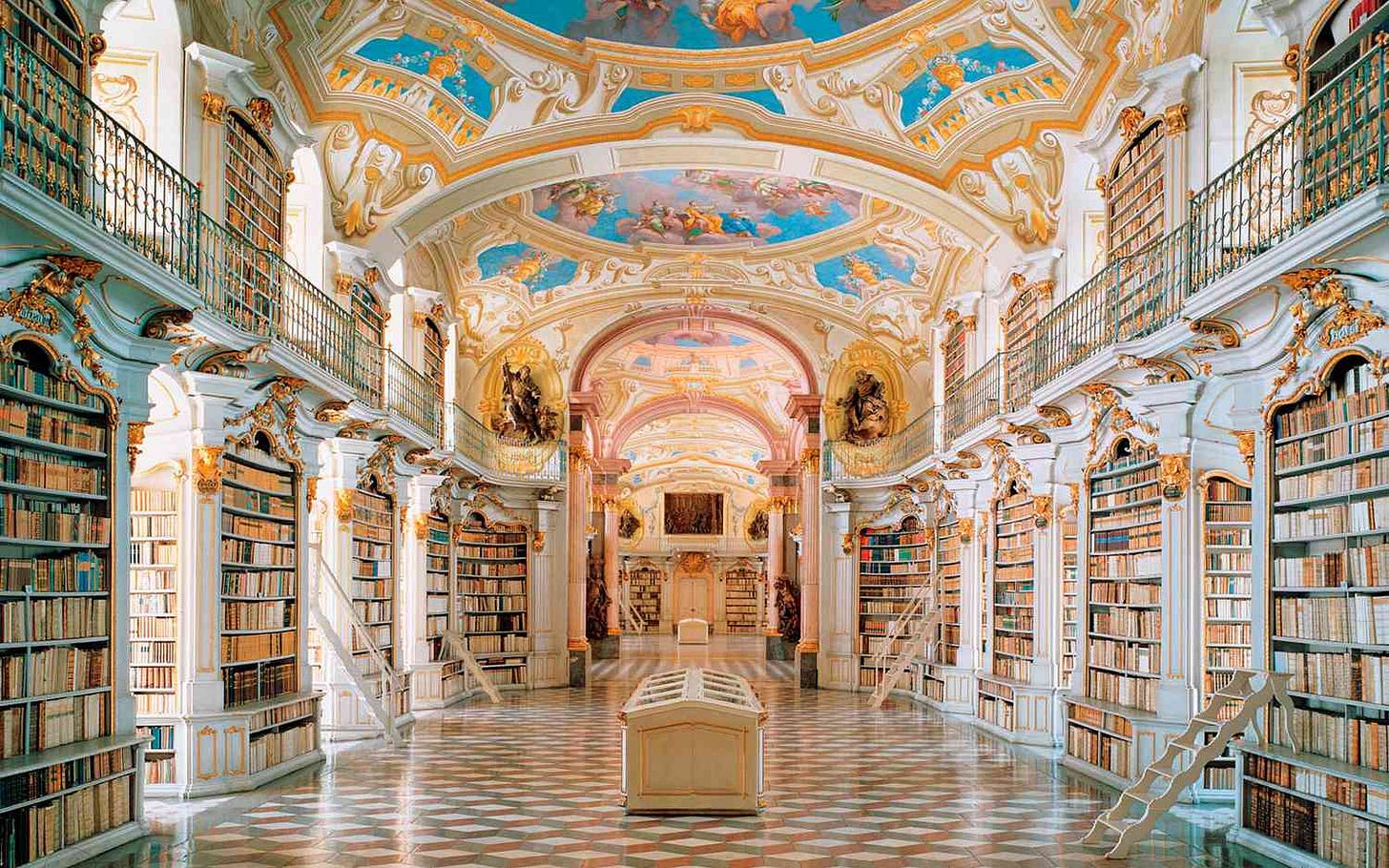

Consider a world where libraries weren’t public institutions. In such a scenario, access to books would depend on purchasing them or subscribing to a service.

We see the same risk with AI in education: if AI tools and resources are locked behind paywalls or controlled by a few corporations, the gap between those who have access and those who don’t will only widen.

How do we make sure all this talk about changing education is relevant to communities around the world with limited resources?

First, we leverage income from the Global North to subsidize our initiatives in the Global South, ensuring that those who need it most can access our tools and resources.

Second, we’re committed to collaborating with open-source AI technologies that are optimized for Android devices, prioritizing local-first solutions that are less dependent on high compute power and bandwidth—factors that can be significant barriers in resource-constrained environments.

Third, equally important is our collaboration with local stakeholders. We’re working on R&D moonshots early on, directly involving communities on the ground to create solutions that are not only innovative but also truly tailored to their needs.

A great example of how we’re making an impact is the work of Emmanuel Kimuli, the lead teacher at Coburwas, the largest refugee school in Western Uganda.

Emmanuel faces the immense challenge of managing class sizes ranging from 60 to 110 students, most of whom are refugees from South Sudan or the Congo. His teaching staff, largely uncredentialed, struggle with limited resources and significant pedagogical challenges.

Emmanuel uses Playlab daily to support his junior teachers with lesson reviews, helping them internalize the content they’ll be teaching and develop strategies for managing such large classrooms effectively. Importantly, he doesn’t do this alone – he’s part of a pan-African learning community spearheaded by the African Leadership Academy in South Africa.

What has been the most difficult part about building and scaling Playlab AI?

The hardest thing has been to focus. We are very early in this very nascent ecosystem. We can’t serve everybody’s needs - even just in learning.

To help us prioritize, we’ve refined the three key problems we try to solve, and we try to make our decisions with those problems in mind.

These three problems are:

Problem 1: Educators are ill-equipped to responsibly use AI.

Educators struggle to keep up with AI advancements, lacking a safe space to explore these technologies' promises and risks.

The stakes are especially high because, unlike previous technologies that required intentional adoption to be introduced to schools (e.g. 1:1 devices or internet), AI is an "arrival technology": it is already present in schools through students, educators, and integrated products.

Problem 2: Limited agency to create context-specific tools.

Most educators globally lack the skills or resources to create tools that address their unique challenges. Instead, they rely on distant software developers, which limits the development of highly niche, impactful tools, especially in nonprofits where the gap with the private sector is widening.

Problem 3: Closed data hinders equity, bias reduction, and research.

Most AI edtech tools are built on proprietary, opaque models that can't be easily examined for bias, posing equity risks. In contrast, open-source models are transparent but not yet ready for educational use, as they need better data to be effective.

I hope you enjoyed the latest edition of Nafez’s Notes.

I’m constantly refining my personal thesis on innovation in learning and education. Please do reach out if you have any thoughts on learning - especially as it relates to my favorite problems.

If you are building a startup in the learning space and taking a pedagogy-first approach - I’d love to hear from you.

Finally, if you are new here you might also enjoy some of my most popular pieces:

The Gameboy instead of the Metaverse of Education - An attempt to emphasize the importance of modifying the learning process itself as opposed to the technology we are using.

Using First Principles to Push Past the Hype in Edtech - A call to ground all attempts at innovating in edtech in first principles and move beyond the hype.

We knew it was broken. Now we might just have to fix it - An optimistic view on how generative AI will transform education by creating “lower floors and higher ceilings”.
